SSD Drives Promise

SSD drives promise to enhance storage performance, but a new host-interface standard holds the key

By Kam Eshghi

http://www.networkworld.com/news/tech/2011/080811-ssd.html

Flash-memory-based solid-state disks (SSDs) provide faster random access and higher data transfer rates than electromechanical drives, and today they can often serve as replacements for rotating disks, but the host interface to SSDs remains a performance bottleneck. PCI Express (PCIe)-based SSDs, together with an emerging standard called NVMe (Non-Volatile Memory Express), promise to solve the interface bottleneck.

SSDs are proving useful today, but they will find far broader use once the new NVMe standard matures and companies deliver integrated circuits that couple the SSD more closely to the host processor.


The real issue at hand is the need for storage technology that can match the exponential ramp in processor performance over the past two decades. Chip makers have continued to ramp the performance of individual processor cores, to combine multiple cores on one IC, and to develop technologies that can closely couple multiple ICs in multi-processor systems. Ultimately, all of the cores in such a scenario need access to the same storage subsystem.

Enterprise IT managers are eager to adopt multiprocessor systems because these systems can boost both the number of I/O operations per second (IOPS) that a system can process and the IOPS delivered per watt of power consumed (IOPS/W). New processors offer better IOPS relative to cost and power consumption, provided the processing elements can get to the data in a timely fashion. Processors that sit idle waiting on data waste time and money.

Storage hierarchy

There are, of course, multiple levels of storage technology in a system that ultimately feed code and data to each processor core. Generally, each core includes local cache memory that operates at core speed. Multiple cores on a chip share a second-level and sometimes a third-level cache, and DRAM feeds the caches. DRAM and cache access times and data-transfer rates have scaled to keep pace with processor performance.

The disconnect lies in the performance gap that exists between DRAM and rotating storage in terms of access time and data rate. Disk-drive vendors have done a great job of designing and manufacturing higher-capacity, lower-cost-per-gigabyte disk drives. But the drives have inherent limits on how fast they can access data and how fast they can then transfer that data into DRAM.

Access time depends on two factors: how fast a hard drive can move the read head over the required track on the disk (seek time), and the rotational latency, or how long it takes for the sector holding the data to rotate under the head. The maximum transfer rate is dictated by the rotational speed of the disk and the data encoding scheme, which together determine the number of bytes per second read from the disk.
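To make the two components of access time concrete, here is a minimal Python sketch of the usual back-of-the-envelope model: average access time is the average seek time plus half a revolution of rotational latency, and the peak media rate is the user data on one track multiplied by revolutions per second. The drive figures (15,000 RPM, 3.5 ms average seek, 1 MB of user data per track) are illustrative assumptions, not taken from any specific product.

```python
# Back-of-the-envelope hard-disk model (assumption: simple seek + rotational
# latency; controller overhead, caching and command queuing are ignored).

def avg_rotational_latency_ms(rpm: float) -> float:
    """On average the target sector is half a revolution away from the head."""
    ms_per_revolution = 60_000.0 / rpm
    return ms_per_revolution / 2.0


def avg_access_time_ms(avg_seek_ms: float, rpm: float) -> float:
    """Average access time = average seek time + average rotational latency."""
    return avg_seek_ms + avg_rotational_latency_ms(rpm)


def max_media_rate_mb_s(rpm: float, user_bytes_per_track: int) -> float:
    """Peak media rate: one track of user data passes under the head per revolution.

    user_bytes_per_track already reflects the recording/encoding scheme,
    i.e. it counts only the data bytes, not the raw bits on the platter.
    """
    revolutions_per_s = rpm / 60.0
    return revolutions_per_s * user_bytes_per_track / 1e6


# Hypothetical 15,000 RPM drive, 3.5 ms average seek, 1 MB user data per track.
latency = avg_rotational_latency_ms(15_000)
access = avg_access_time_ms(3.5, 15_000)
print(f"rotational latency: {latency:.1f} ms")        # 2.0 ms
print(f"average access time: {access:.1f} ms")        # 5.5 ms
print(f"random IOPS ceiling: {1000 / access:.0f}")    # 182
print(f"peak media rate: {max_media_rate_mb_s(15_000, 1_000_000):.0f} MB/s")  # 250 MB/s
```

Even with these generous figures, a single drive tops out at a couple of hundred random I/Os per second, because every random request pays the mechanical positioning cost.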

Hard drives perform relatively well in reading and transferring sequential data. But random seek operations add latency. And even sequential read operations can’t match the data appetite of the latest processors.
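The gap between sequential and random performance follows from the same kind of model. In this sketch (hypothetical drive figures again: 5.5 ms positioning cost, 250 MB/s media rate), every random request pays a fixed positioning cost before its data streams off the platter, so small random reads deliver only a tiny fraction of the media rate.

```python
# Sketch: effective hard-drive throughput when every request pays a fixed
# positioning cost (seek + rotational latency) before data streams off the media.
# ACCESS_MS and MEDIA_MB_S are hypothetical values.

def effective_throughput_mb_s(request_bytes: int, access_ms: float, media_mb_s: float) -> float:
    """MB/s delivered by back-to-back requests of one size."""
    transfer_s = request_bytes / (media_mb_s * 1e6)  # time to stream the data
    total_s = access_ms / 1000.0 + transfer_s         # positioning + streaming
    return request_bytes / total_s / 1e6


ACCESS_MS = 5.5     # assumed average seek + rotational latency per random request
MEDIA_MB_S = 250.0  # assumed peak media transfer rate

# Sequential streaming: the head is already in position, so access cost is ~0.
print(f"sequential:       {effective_throughput_mb_s(1 << 20, 0.0, MEDIA_MB_S):6.1f} MB/s")

# Random requests of increasing size, each paying the full access time.
for size in (4 << 10, 64 << 10, 1 << 20):
    rate = effective_throughput_mb_s(size, ACCESS_MS, MEDIA_MB_S)
    print(f"random {size >> 10:5d} KB: {rate:6.1f} MB/s")
```

Small (4 KB) random reads come out below 1 MB/s in this model, which is why random-access workloads expose the mechanical limits of disks far more than streaming ones do.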

Meanwhile, enterprise systems that perform online transaction processing, such as financial transactions, or that mine data in applications such as customer relationship management require highly random access to data. Cloud computing also has a random element, and the random-access problem in general is escalating as technologies such as virtualization expand the number of applications a single system has active at any one time. Every microsecond of latency translates directly into lost money, less efficient use of the processors, and wasted system power.


Fortunately, flash memory offers the potential to plug the performance gap between DRAM and rotating storage. Flash is slower than DRAM but costs less per gigabyte. It costs more per gigabyte than disk storage, but enterprises will gladly pay the premium because flash also offers much higher throughput in MB/sec and faster access to random data, resulting in better cost-per-IOPS than rotating storage.
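The cost trade-off comes down to two ratios: price per gigabyte and price per IOPS. The prices, capacities and IOPS figures in this sketch are invented for illustration; the point is only that a device can lose on the first ratio and still win decisively on the second.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    price_usd: float
    capacity_gb: float
    random_iops: float

    @property
    def cost_per_gb(self) -> float:
        return self.price_usd / self.capacity_gb

    @property
    def cost_per_iops(self) -> float:
        return self.price_usd / self.random_iops


# Hypothetical figures chosen only to illustrate the trade-off.
hdd = Device("15K RPM HDD", price_usd=300, capacity_gb=600, random_iops=180)
ssd = Device("enterprise SSD", price_usd=1200, capacity_gb=200, random_iops=20_000)

for d in (hdd, ssd):
    print(f"{d.name:15s} ${d.cost_per_gb:5.2f}/GB  ${d.cost_per_iops:6.3f}/IOPS")
```

With these numbers the SSD costs 12 times more per gigabyte but less than a twentieth as much per IOPS, which is the metric that matters for random-access workloads.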

Growing flash capacity at reasonable cost has driven a trend toward SSDs that package flash in disk-drive-like form factors. These SSDs have also most often used disk-drive interfaces such as SATA (Serial ATA) or SAS (Serial Attached SCSI).

Today SSDs use disk interfaces

The disk-drive form factor and interface allow IT vendors to seamlessly substitute an SSD for a magnetic disk drive. No change is required in system hardware or driver software. You can simply swap in an SSD and realize significantly better access times and somewhat faster data-transfer rates.

However, the disk-drive interface is not ideal for flash-based storage. Flash can support higher data transfer rates than even the latest generation of disk interfaces. Also, SSD manufacturers can pack enough flash devices in a 2.5-inch form factor to easily exceed the power profile developed for disk drives.

Let’s examine the disk interfaces more closely. Most mainstream systems today use 3Gbps interfaces (second-generation SATA and first-generation SAS) that offer 300MB/sec transfer rates. Third-generation SATA and second-generation SAS double the line rate to 6Gbps and push throughput to 600MB/sec, and drives based on those interfaces have already found use in enterprise systems.
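The 300MB/sec and 600MB/sec figures follow from the line rates because SATA and SAS use 8b/10b encoding, which puts 10 bits on the wire for every 8 bits of data. A short sketch of that arithmetic, giving the payload rate per link and ignoring framing and protocol overhead:

```python
def payload_mb_s(line_rate_gbps: float, data_bits: int = 8, coded_bits: int = 10) -> float:
    """Usable MB/s for a serial link with data_bits/coded_bits line encoding.

    Defaults to 8b/10b, as used by SATA and SAS at these speeds.
    Framing and protocol overhead are not subtracted.
    """
    payload_bits_per_s = line_rate_gbps * 1e9 * data_bits / coded_bits
    return payload_bits_per_s / 8 / 1e6  # bits -> bytes -> MB


for label, rate in [("3Gbps SATA/SAS", 3.0), ("6Gbps SATA/SAS", 6.0)]:
    print(f"{label}: {payload_mb_s(rate):.0f} MB/s")
# 3Gbps SATA/SAS: 300 MB/s
# 6Gbps SATA/SAS: 600 MB/s
```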

While those data rates support the fastest electromechanical drives, new NAND flash architectures and multi-die flash packaging deliver aggregate flash bandwidth that exceeds the throughput capabilities of SATA and SAS interconnects. In short, the SSD performance bottleneck has shifted from the flash devices to the host interface. The industry needs a faster host interconnect to take full advantage of flash storage.
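One simple way to see the shift is to treat the SSD as two stages in series: the flash array, whose channels work in parallel, and the host link. Delivered bandwidth is the minimum of the two. The channel count (8) and per-channel rate (200 MB/s) in this sketch are hypothetical.

```python
def delivered_bandwidth(channels: int, per_channel_mb_s: float,
                        interface_mb_s: float) -> tuple[float, str]:
    """Return (delivered MB/s, limiting stage) for a flash array behind a host link."""
    flash_mb_s = channels * per_channel_mb_s  # channels operate in parallel
    if flash_mb_s > interface_mb_s:
        return interface_mb_s, "host interface"
    return flash_mb_s, "flash array"


CHANNELS = 8              # hypothetical controller channel count
PER_CHANNEL_MB_S = 200.0  # hypothetical per-channel flash bandwidth

for name, link_mb_s in [("3Gbps SATA", 300.0), ("6Gbps SATA", 600.0)]:
    mb_s, limit = delivered_bandwidth(CHANNELS, PER_CHANNEL_MB_S, link_mb_s)
    print(f"{name}: {mb_s:.0f} MB/s delivered, limited by the {limit}")
```

In this example the array could supply 1,600 MB/s, but either SATA link caps what the host actually sees.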

The PCIe host interface can overcome this storage performance bottleneck and deliver much higher performance by attaching the SSD directly to the PCIe host bus. For example, a 4-lane (x4) PCIe Generation 3 (Gen3) link, which will ship in volume in 2012, can deliver data rates of roughly 4GB/sec in each direction. Moreover, the direct PCIe connection can reduce system power and slash the latency attributable to the legacy storage infrastructure.
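The roughly 4GB/sec figure can be derived from the PCIe signaling rates. Generations 1 and 2 use 8b/10b encoding, while Gen3 raises the rate to 8 GT/s per lane and switches to the more efficient 128b/130b encoding. This sketch computes per-direction payload bandwidth before packet overhead and compares an x4 Gen3 link with a 600MB/sec SATA or SAS link.

```python
# Per-direction PCIe payload bandwidth from signaling rate, lane count and
# line encoding. Transaction-layer packet overhead is not subtracted.

PCIE_GENERATIONS = {
    # generation: (gigatransfers per second per lane, encoding efficiency)
    1: (2.5, 8 / 10),     # 8b/10b
    2: (5.0, 8 / 10),     # 8b/10b
    3: (8.0, 128 / 130),  # 128b/130b
}


def pcie_mb_s(generation: int, lanes: int) -> float:
    gt_s, efficiency = PCIE_GENERATIONS[generation]
    bits_per_s = gt_s * 1e9 * efficiency * lanes
    return bits_per_s / 8 / 1e6  # bits -> bytes -> MB


SATA_6G_MB_S = 600.0  # 6Gbps SATA/SAS payload rate

x4_gen3 = pcie_mb_s(3, 4)
print(f"PCIe Gen3 x4: {x4_gen3:.0f} MB/s per direction")   # 3938
print(f"vs. 6Gbps SATA/SAS: {x4_gen3 / SATA_6G_MB_S:.1f}x")  # 6.6x
```

The ratio of about 6.6 is consistent with the "more than five times" throughput advantage over SATA or SAS.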

PCIe affords the needed storage bandwidth

Clearly an interface such as PCIe can handle the bandwidth of a multichannel flash storage subsystem, and it offers additional performance advantages. SSDs that use a disk interface also suffer latency added by the storage-controller IC that handles disk I/O. PCIe devices connect directly to the host bus, eliminating the architectural layer associated with the legacy storage infrastructure. The compelling performance of PCIe SSDs has led top-tier OEMs to place PCIe SSDs in servers as well as in storage arrays, building tiered storage systems that accelerate applications while improving cost-per-IOPS.

Moving storage to a PCIe link brings additional challenges to the system designer. As mentioned, the SATA- and SAS-based SSD products have maintained software compatibility and some system designers are reluctant to give up that advantage. Any PCIe storage implementation will create the need for some new driver software.

Despite the software issue, the move to PCIe storage is already happening. Performance demands in the enterprise are mandating this transition. There is no other apparent way to deliver the improvements in IOPS, IOPS/W and IOPS per dollar that IT managers are demanding.

The benefits of using PCIe as a storage interconnect are clear. You can achieve more than five times the data throughput of SATA or SAS. You can eliminate components such as host bus adapters and the SERDES ICs used on SATA and SAS interfaces, saving money and power at the system level. And PCIe moves the storage closer to the processor, reducing latency.

So the question the industry faces isn’t really whether to use PCIe to connect with flash storage, but how to do so. There are a number of options with some early products already in the market.

Stopgap PCIe SSD implementations

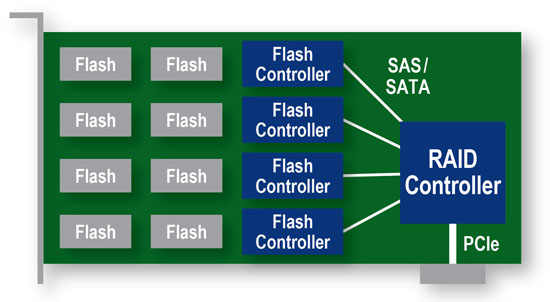

The simplest implementations can utilize existing flash memory controller integrated circuits that, while capable of controlling memory read and write operations, have no support for the notion of system I/O. Such flash controllers would typically work behind a disk interface IC in existing SATA- or SAS-based SSD products.

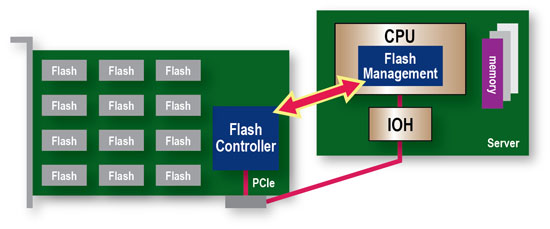

Alternatively, you can run flash-management software on the host processor to enable a simple flash controller to function across a PCIe interconnect. That approach is not ideal. First, it consumes host processing and memory resources that ideally would be handling more IOPS. Second, it requires proprietary drivers and raises OEM qualification issues. Third, it doesn’t deliver a bootable drive because the system must be booted for the flash-management software to execute and enable the storage scheme. And fourth, such an architecture is not scalable due to ever-increasing system resource demands.

In the short term, these designs will clearly find niche success. Today the products are used primarily as caches for hard disk drives rather than as mainstream replacements for high-performance disk drives.

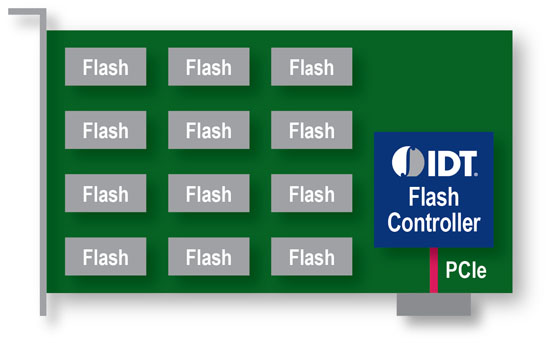

Longer term, a more robust and efficient PCIe SSD design will rely on a complex SoC that natively supports PCIe, integrates flash controller functionality, and fully implements the storage-device concept. Such a product would offload flash management from the host CPU and memory, and ultimately enable standard OS drivers that support plug-and-play operation just as we enjoy with SATA and SAS today.

The NVMe specification defines an optimized register interface, command set and feature set for PCIe SSDs. The goal is to help enable the broad adoption of PCIe-based SSDs and to provide a scalable interface that realizes the performance potential of SSD technology now and into the future. The NVMe 1.0 specification may be downloaded from www.nvmexpress.org.

The NVMe specification is specifically optimized for multicore system designs that run many threads concurrently, each capable of initiating I/O operations. Indeed, it’s optimized for exactly the scenario that IT managers are hoping to leverage to boost IOPS. The NVMe specification supports up to 64K I/O queues with up to 64K commands per queue, so each processor core can have its own queue.
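The per-core queue idea can be illustrated with a simplified ring buffer. This Python sketch is not the NVMe register interface: real queues hold 64-byte command entries, are paired with completion queues, and are advanced by writing doorbell registers, none of which is modeled here. It does borrow the specification's convention of leaving one slot empty so that "full" and "empty" can be told apart, and it gives each core its own queue so cores never contend for a shared lock. The class, function and constant names are hypothetical.

```python
MAX_QUEUE_ENTRIES = 65_536  # NVMe allows up to 64K entries per I/O queue


class SubmissionQueue:
    """Simplified ring buffer modeled loosely on an NVMe submission queue.

    The host (producer) writes at `tail`; the controller (consumer) reads at `head`.
    Empty when head == tail; full when (tail + 1) % size == head,
    so a queue of `size` slots holds at most size - 1 commands.
    """

    def __init__(self, qid: int, size: int):
        if not 2 <= size <= MAX_QUEUE_ENTRIES:
            raise ValueError("queue size must be between 2 and 65,536 entries")
        self.qid = qid
        self.size = size
        self.slots = [None] * size
        self.head = 0
        self.tail = 0

    def is_empty(self) -> bool:
        return self.head == self.tail

    def is_full(self) -> bool:
        return (self.tail + 1) % self.size == self.head

    def submit(self, command) -> bool:
        """Host side: enqueue a command; return False if the queue is full."""
        if self.is_full():
            return False
        self.slots[self.tail] = command
        self.tail = (self.tail + 1) % self.size
        # A real driver would now write the new tail value to a doorbell register.
        return True

    def fetch(self):
        """Stand-in for the controller consuming the next command (None if empty)."""
        if self.is_empty():
            return None
        command = self.slots[self.head]
        self.slots[self.head] = None
        self.head = (self.head + 1) % self.size
        return command


# One I/O queue per core. Queue ID 0 is reserved for the admin queue in NVMe,
# so I/O queue IDs start at 1 here.
NUM_CORES = 4
queues = {core: SubmissionQueue(qid=core + 1, size=8) for core in range(NUM_CORES)}


def submit_from_core(core_id: int, command) -> bool:
    """Each core touches only its own queue, so no cross-core lock is needed."""
    return queues[core_id].submit(command)
```

For example, `submit_from_core(2, "read LBA 0")` places the command only in core 2's queue; with eight slots, each queue accepts seven outstanding commands before `submit` returns False.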

In June 2011, the NVMe Promoter Group was formed to enable the broad adoption of the NVMe standard for PCIe SSDs. Seven industry leaders will hold permanent seats on the board: Cisco, Dell, EMC, IDT, Intel, NetApp and Oracle. Six other seats will be filled by election from companies that are members of the NVMHCI Work Group.

There is still work to be done before NVMe becomes a mainstream technology, but the broad support among a range of industry participants virtually guarantees that the technology will become the standard for high-performance SSD interconnects. Supporters include IC manufacturers, flash-memory manufacturers, operating-system vendors, server manufacturers, storage-subsystem manufacturers and network-equipment manufacturers.

Over the next 12 to 18 months, expect the pieces to fall into place for NVMe support as drivers emerge for most popular operating systems. Moreover, companies will deliver the enterprise flash-controller SoCs required to enable NVMe.

Form factors for PCIe SSDs

The NVMe standard does not address SSD form factors; that issue is being worked out by a separate working group.

In enterprise-class storage, devices such as disk drives and SSDs are typically externally accessible and support hot-plug capabilities. In part, hot-plug capability was required because disk drives are mechanical and generally fail sooner than ICs. The hot-plug feature allows easy replacement of failed drives.

With SSDs, IT managers and storage vendors will want to stay with an externally accessible modular approach. Such an approach supports easy addition of storage capacity either by adding SSDs, or replacing existing SSDs with more capacious ones.

That separate standards body has been formed specifically to address the form-factor issue. The SSD Form Factor Working Group is focused on promoting PCIe as an SSD interconnect. The working group is driven by five Promoter Members: Dell, EMC, Fujitsu, IBM and Intel.

The form-factor group started in the fall of 2010, focusing on three areas:

- A connector specification that will support PCIe as well as SAS/SATA.

- A form factor that builds upon the current 2.5-inch standard while supporting the new connector definition and expanding the power envelope in support of higher performance.

- Support for hot-plug capability.

The building blocks are all falling into place for broader use of PCIe-connected SSDs and for delivery of the performance improvements that the technology will bring to enterprise applications. And while the focus has been on the enterprise, the NVMe standard will surely trickle down to client systems as well, boosting performance even in notebook PCs while reducing cost and system power. The standard will drive far more widespread use of PCIe SSD technology as compatible ICs and drivers come to fruition.